Experts say a pending B.C. Supreme Court case could provide clarity and perhaps even set a precedent on the use of AI models like ChatGPT in Canada’s legal system.

The high-profile case involves bogus case law produced by ChatGPT and allegedly submitted to the court by a lawyer in a high-net-worth family dispute. It is believed to be the first of its kind in Canada, though similar cases have surfaced in the United States.

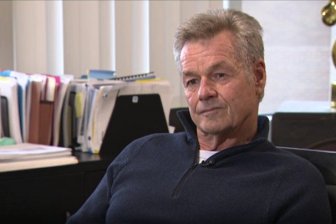

“It is serious in the sense that it is going to create a precedent and it’s going to give some guidance, and we’re going to see in a couple of ways,” Jon Festinger, K.C., an adjunct professor with UBC’s Allard School of Law, told Global News.

“There’s the court proceeding around costs … The other part of this is the possibility of discipline from the Law Society in terms of this lawyer’s actions, and questions around … law, what is the degree of technological competence that lawyers are expected to have, so some of that may become more clear around this case as well.”

Lawyer Chong Ke, who allegedly submitted the bogus cases, is now facing an investigation by the Law Society of B.C.

The opposing lawyers in the case she was litigating are also suing her personally for special costs, arguing they should be compensated for the work needed to uncover the fact that bogus cases were nearly entered into the legal record.

Ke’s lawyer has told the court she made an “honest mistake” and that there is no prior case in Canada in which special costs were awarded under similar circumstances.

Ke apologized to the court, saying she was not aware the artificial intelligence chatbot was unreliable and that she did not check whether the cases actually existed.

UBC assistant professor of computer science Vered Shwartz said the public does not appear to be well enough educated on the potential limits of new AI tools.

“There is a major problem with ChatGPT and other similar AI models, language models: the hallucination problem,” she said.

“These models generate text that looks very human-like, looks very factually correct, competent, coherent, but it might actually contain errors because these models were not trained on any notion of the truth, they were just trained to generate text that looks human-like, looks like the text they read.”

ChatGPT’s own terms of use warn users that the content generated may not be accurate in some cases.

But Shwartz believes the companies that produce tools like ChatGPT need to do a better job of communicating their shortfalls, and that the tools should not be used for sensitive applications.

She said the legal system also needs more rules about how such tools are used, and that until guardrails are in place the best solution is likely to simply ban them.

“Even if someone uses them just to help with the writing, they need to be responsible for the final output and they need to check it and make sure the system did not introduce some factual errors,” she said.

“Unless everyone involved would fact-check every step of the process, these things might go under the radar, it could have happened already.”

Festinger said that education and training for lawyers about what AI tools should and shouldn’t be used for is essential.

But he said he remains hopeful about the technology. He believes more specialized AI tools that deal specifically with law and are tested for accuracy could be available within the next decade, something he said would be a net positive for the public when it comes to access to justice.

B.C. Supreme Court Justice David Masuhara is expected to deliver a decision on Ke’s liability for costs within the next two months.

With files from Rumina Daya

© 2024 Global News, a division of Corus Entertainment Inc.